*How is it harmful, again? For which people?*

The extremely (not) surprising key findings of a paper titled "Robots, meaning, and self-determination", says the Register, would be that "robots harm work meaningfulness and autonomy". It seems to me that such findings, or at least the way they are presented, overlook something that should be even more obvious.

The study focused not on the purely monetary impacts of industrial automation, but on how industrial robots affect both the meaning of work and the self-determination of workers.

What it found is that the adoption of industrial robots "takes a mental toll on workers, especially those with repetitive jobs [and] routine responsibilities". That toll consists of diminished need for creative problem solving, reduced worker autonomy and reduced human interactions to the detriment of work relationships.

Quantitatively speaking, the study found that the average increase in robotization between 2005 and 2020 was almost four-fold (389%), and as much as 26 times in industries like mining and quarrying, and that doubling the presence of robots led to a 0.9 percent decline in work meaningfulness and a 1 percent decline in autonomy.

The authors also concluded that one way to mitigate the loss of autonomy is to give workers control: "Specifically, working with computers completely offsets the negative consequences of automation for autonomy". That is, robots make workers feel worse, unless the workers control (or "teach", that is, program) the machines, whether those machines are robots or not.

Forms and consequences of "Reduced human interaction"

Robots are just software, after all. Whatever the exact amount of alienation caused by industrial robots, the problem surely exists, and it exists, albeit in different forms, with every kind of automation and in almost every sector of the economy, including retail and any kind of office or "knowledge" work.

When it comes to interaction between workers and their end users or customers, for example, much automation just hides and alienates the former, rather than replacing them. The latest perfect example of this issue is Amazon's Just Walk Out stores, which only seemed completely automated: they relied on more than 1,000 people in India, obviously paid much less than Western cashiers, who watched and labeled videos to ensure accurate checkouts.

In factories, warehouses and offices, instead, "reduced human interactions that harm work relationships" are the unavoidable consequence of a fact that is much older than computers and software. In order to understand, reproduce and manage any kind of complex process, from photosynthesis to satellite building, science and technology must split that process into as many elementary parts as possible.

When coupled with capitalism, mass production and the bureaucratization of life, that fact unavoidably leads to an ever more meticulous division of labor, where every person has their own defined task, until every worker only needs to depend on some machine. As an acute Japanese writer observed more than 30 years ago, automation like this has caused "every individual to find their determined position in the great societal machine...This is the strongest organizational force in modern society. While it lies outside the confines of politics and law, it is nonetheless very powerful".

Did you notice that the last two sentences are one of the best definitions of Big Tech one could give? But I digress.

Automation does NOT create more USABLE jobs

The story goes that yes, automation creates alienation and eventually unemployment, but that's not a problem, because it always creates even more new, surely better jobs. Yeah, right. But for whom, and how usable? All this displacement by software and robots would not be a problem IF:

jobs were not the only way to pay for one's rent, mortgage, food and clothes (or, put another way, if we didn't insist on getting concepts like UBI and AI in reverse order)

ENOUGH decent jobs for the RIGHT people (that is, the workers displaced by automation) not only existed but, above all, COULD be taken WHEN those workers need them: as soon as possible, not after some magic "upskilling" that in most cases would have to be either so advanced that it would fail, or so long that it would cost taxpayers much more than unemployment insurance

The reality today is (quoting someone else now, not me) that we won't be able to retrain the majority of the workforce fast enough to take the new jobs in emerging industries. Not in a world where, unlike in previous industrial revolutions, older workers far outnumber younger (aspiring) ones and would need much more training, and where there are "entire industries' disruptions in periods that are shorter than election cycles".

Automation isn't even "freeing time"

A while ago, I almost spat out my tea when I read that automation is freeing time, because "there is a sports equipment boom in the USA, and how was the time to use it freed, if not through machines?" One answer to this "argument" may be that those who can afford sports equipment or state-of-the-art gym memberships are far fewer than the working poor. If automation is freeing time, how come most families are forced to have every adult member working full time just to make ends meet? Another answer may be that, if so many people lose weight with drugs like Ozempic instead of practicing some sport, making those drugs scarcer and more expensive for those who really need them, it is because automation just gave them more money, but not enough time to spend it decently.

Side note on humanoid robots

While we are at it... Another symptom of how much confusion and hype there is about robotic automation and related issues is the interest, which I fail to share outside of science fiction, in humanoid robots. Most industrial robots or machines have no need whatsoever to be human-shaped, and never will. If they were, they'd probably be less effective, not more: "you don't make [humanoid] robots to fix all plumbing, you design all plumbing to be fixable by robots". Outside factories, what automation would be better? Better appliances, or housemaid-shaped robots that have the same probability of mastering the billion situations they would meet in real homes as "driverless" cars have of becoming really able to drive autonomously on all real roads? Ditto for warfare, office work and so on...

Robots shaped like animals would be much better, for at least two reasons. One is that certain animal shapes would actually be better for certain jobs. Four-legged or serpentine robots, for example, could explore and monitor caves, oil pipes, ship bilges and thousands of similar environments much better than anything human-shaped, human or robot.

The other, and most important, reason is that the less robots look like humans, the easier it is to avoid confusing what being human or machine means. Robots that look like humans make it easier to treat even humans like robots. Having "someONE", not someTHING, to dominate, or to reduce one's need for actual humans, hardly seems a path to positive human growth. As proof of that, we can just look at the biggest, already existing market for "something that looks like a human to boss around":

What that study doesn't say

Back to the links between automation and displacement, whether by robots, AI or anything else: I recently read here that "technology's displacement effects can be outweighed if new tasks are created where labor has an advantage over automation. This is a huge if."

No, that's not a huge "if". It's a huge "WHY???"

Let's be clear: we do need much more automation than today, albeit of a very different nature than most startuppers or venture capitalists would like you to buy. I am pretty sure that the miners of 150 or even just 50 years ago would have given a few years of a life they couldn't enjoy anyway, displacement be damned, to work in the much better, much safer conditions that highly automated mining can provide today. There are still plenty of necessary but dangerous jobs that can and should use more automation. It's equally obvious that even the bare maintenance of existing vital infrastructure, from ports to bridges and power lines, will need much more automation, if you consider how quickly populations are aging worldwide, and how many of their younger members, the ones best suited for certain jobs, are still blinded by the idea of getting a good degree just to land a "great job".

That said, the same author who wrote:

"They only need to depend on a machine"

"working with computers completely offsets the negative consequences of automation for autonomy"

also left something critical out when he wrote that science and technology "guaranteed the values of individualism" and "improved the status of individuals, increased individual self-consciousness, and strengthened the sense of individual responsibility".

The problem is that "need to depend" can mean two terribly different things. One is depending on a machine to do one's work, be it a computer to blog, a circular saw to build cabinets or a synthesizer to play music. The other is depending as in "being subordinate to, being managed by", without responsibilities.

It's just the difference between "working with" and "working for/under", but it's huge. The first scenario is good; the other is the dystopia where (regardless of its appearance) a computer is in charge, bossing a human around "at a robotic pace, with robotic vigilance". One gets increased individual self-consciousness and all that ONLY if and when what automation provides are tools that extend one's capabilities, not inhuman bosses and controllers.

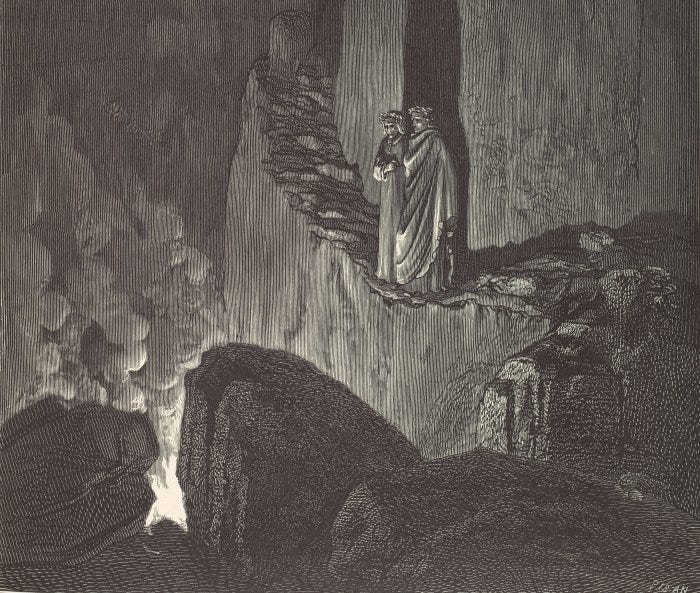

The final and most serious problem about automation that the study leaves unsaid is the one that Dante Alighieri explained seven centuries ago:

"Call to mind from whence ye sprang: ye were not form'd to live the life of brutes, but virtue to pursue and knowledge high." (Inferno, Canto XXVI)

There is no amount of control or "creative problem solving" that can avoid mental tolls if, as happens more and more frequently, the end product or "mission" of one's job is itself inherently pointless or outright superfluous. I suspect that this is the displacement, lack of meaning, or whatever you want to call it, that automation often just exposes rather than creates: maybe automation scares a lot of people not (just) because it makes them lose their jobs, but because it makes it impossible to ignore that their jobs themselves were worthless to begin with.

Of course, that's a problem only as long as full-time work for every adult remains a dogma, and only for those who must absolutely have a job, rather than a purpose, to feel they are worth something.

Usual final call: the more direct support I get, the more I can investigate and share content like this with everybody who could and should know it. If you can’t or don’t want to do it with a paid subscription, you may fund me directly via PayPal (mfioretti@nexaima.net), LiberaPay, or in any of the other ways listed here.

Substacks quoted here: SK Ventures and T. Greer.